Knowledge Engineer == Knowlengr

Standards-based strategic initiatives for cybersecurity & AI.

-

Book Review: Business Storytelling for Dummies

Homer’s Odyssey was preserved through an oral tradition of storytelling. NPR listeners are familiar with StoryCorps, a show that features some of the 45,000 interviews recorded by the organization of the same name, and The Moth, another show that features unscripted storytelling. At the opposite end of this humane tradition is “Death by PowerPoint.” This…

-

Feedspot: RSS Reader for Google Reader Diaspora

Software review: Feedspot is one of several browser-based Really Simple Syndication (RSS) readers that offers features similar to Google Reader, and — this was critical for many users — includes Outline Processor Markup Language (OPML) import.

-

Analyzing the Beast that is Cybersecurity

What sort of beast is “cybersecurity” anyway? Failure Analysis: Is it simply a variation of software failure? According to this analysis, a security lapse is a software engineering failure, not technically different from an unintended “404” error or an “uncaught” exception. Protection Analysis: Is it simply a failure to implement corrective measures? This analysis likens…

-

Celebrity’s Anonymous Pen Name ‘Outed’ by Software

The role that software plays in the stylistic analysis of text is perhaps less surprising to high school and college students than to the general public. Students must submit their essays to software that checks for plagiarism and sometimes also assesses writing quality. In the recent outing of J.K. Rowling…

-

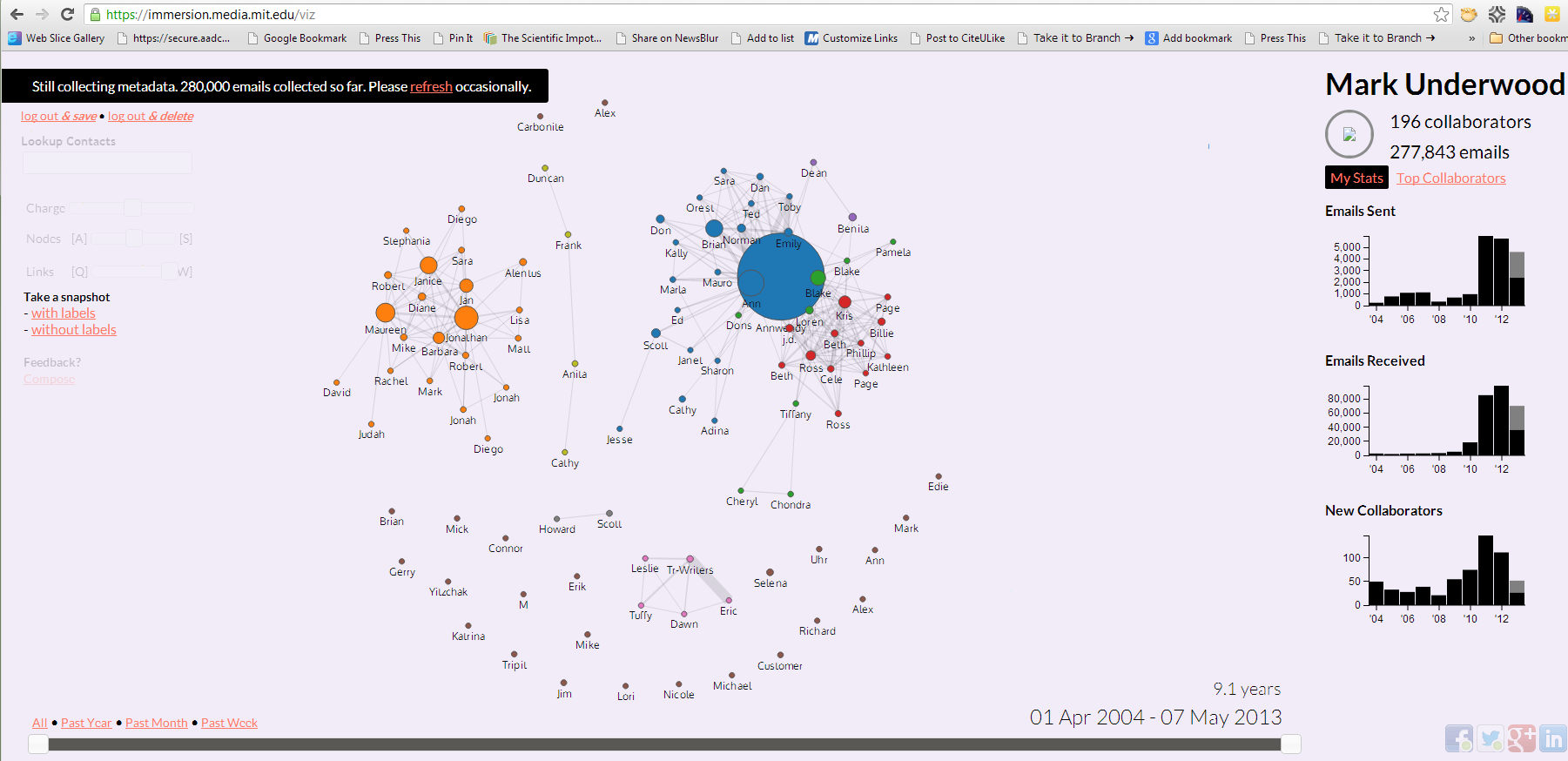

Cool Socnet Visualization from MIT’s Immersion Project

A previous post considered some practical implications for privacy and government surveillance stemming from the Snowden revelations about the Prism program. The point was made that some people who think they have nothing to hide could easily become ensnared in webs not of their own making, and could find it difficult to untangle themselves. Interest…

-

Nothing to Hide? Or Afraid of a ‘Metadata Sweep’?

This post first appeared on the Port Washington Patch. In a recent discussion of the Edward Snowden Affair with family members, two basic attitudes toward the government’s selective spying on U.S. citizens emerged. The Innocence Argument: “I have nothing to hide, so I don’t care what the federal government wants to know about me.” The Privacy Argument: “The…

-

Bush the Elder’s “Vision Thing”

A colleague suggested a TED talk by Simon Sinek on “leadership.” Can any talk or book about “leadership” be credible? I am suspicious of anyone who casually proposes that humans are motivated “by biology not psychology,” as if the two could be cleanly partitioned off from one another. I can perhaps overlook that oversimplification. But most organizations “believe”…

-

Recruiting #fail: On Recruiting for Proficiency

What follows is a position description received this month from a firm, not a recruiter. Required Technical Skills: Proficiency in all MS Office applications including MS Project; Front end development (HTML, Flash, Ajax, Javascript – templates); Back end development (XML, HTTPS, Web Services, Web dav, data mapping); Experience with implementing and managing Demand Ware…

-

Will $100M Trickle Watson Down to SMB Enterprises?

Bloomberg News reported that IBM plans to invest an additional $100 million in its Watson technology. Earlier in 2011, Watson surpassed long-unmet expectations for artificial intelligence by easily defeating two Jeopardy! champions on national TV. While Watson-like technologies could be used in a variety of settings (e.g., network management or health care), the steep investments IBM has already made suggest…

-

Use (Corporate Knowledge) or Lose It

When a firm decides to shutter operations, the loss of knowledge capital in the form of talent should appear somewhere in the risk assessment. While significant short-term savings may be achieved by closing a division (in the case of Microsoft, perhaps to save $$$ to purchase Skype?), one side effect can be a brain…
